Hi everyone, today we are going to look at tokenization in large language models (LLMs). Sadly, tokenization is a relatively complex and gnarly component of state-of-the-art LLMs, but it is necessary to understand it in some detail, because many of the strange behaviors of LLMs that might be attributed to the neural network, or that otherwise seem mysterious, actually trace back to tokenization.

So what is tokenization? Well, it turns out that in our previous video, "Let's build GPT from scratch," we already covered tokenization, but only a very simple, naive, character-level version of it. If you go to the Google Colab for that video, you'll see that we started with our training data (Shakespeare), which is just one large string in Python:

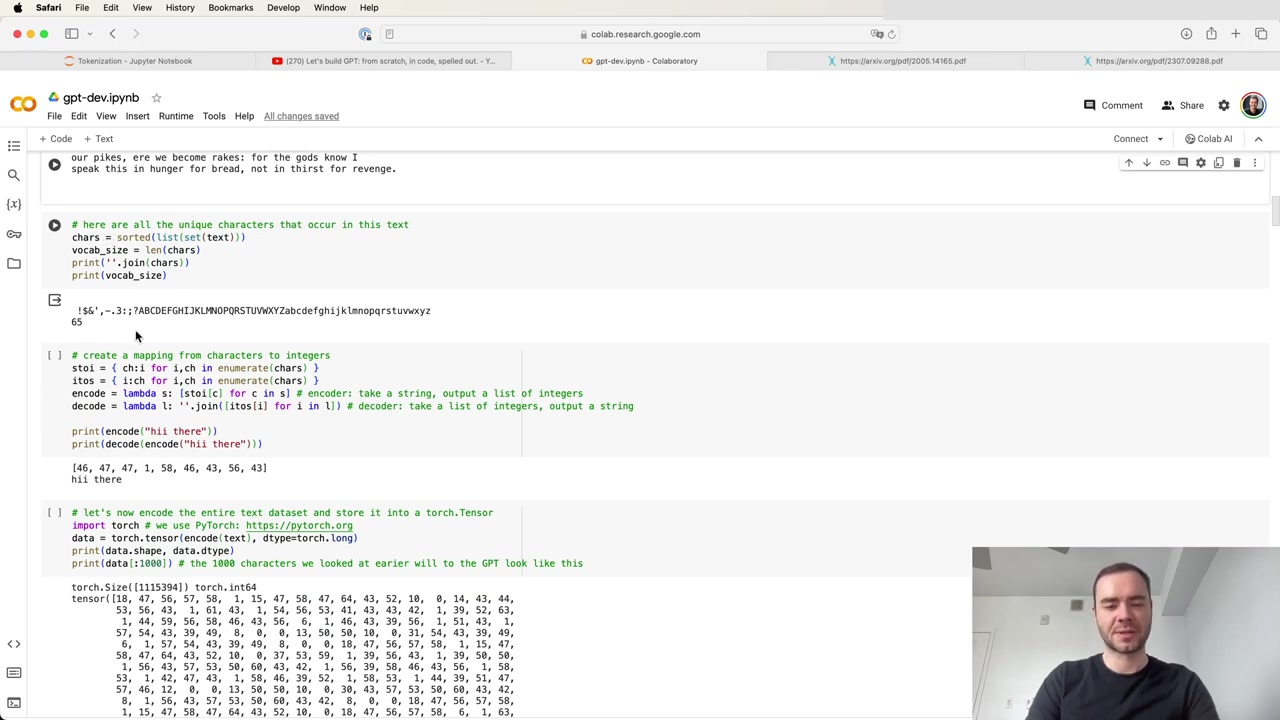

But how do we feed strings into a language model? Well, we saw that we did this by first constructing a vocabulary of all the possible characters we found in the entire training set:

```python
# here are all the unique characters that occur in this text
# (`text` is the training string, i.e. the Shakespeare dataset loaded earlier)
chars = sorted(list(set(text)))
vocab_size = len(chars)
print(vocab_size)
print(''.join(chars))
```
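A vocabulary on its own is not yet a tokenizer: we also need a mapping from each character to an integer id and back. The sketch below is a minimal, self-contained version of that character-level scheme. It assumes a short stand-in string in place of the full Shakespeare text, and the names `stoi`, `itos`, `encode`, and `decode` are illustrative.

```python
# Minimal character-level tokenizer (illustrative sketch).
# A short stand-in string replaces the full Shakespeare training text.
text = "First Citizen: Before we proceed any further, hear me speak."

# Vocabulary: every unique character, sorted so the id assignment is deterministic
chars = sorted(list(set(text)))
vocab_size = len(chars)

# Lookup tables: character -> integer id, and integer id -> character
stoi = {ch: i for i, ch in enumerate(chars)}
itos = {i: ch for i, ch in enumerate(chars)}

def encode(s):
    """Map a string to a list of integer token ids, one per character."""
    return [stoi[c] for c in s]

def decode(ids):
    """Map a list of integer token ids back to a string."""
    return ''.join(itos[i] for i in ids)

ids = encode("hear me")
print(vocab_size, ids)
print(decode(ids))
```

Every character becomes exactly one token, so sequences are as long as the text itself, and any character that never appeared in the training string raises a `KeyError` in `encode`.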

In practice, state-of-the-art language models use much more complicated schemes for constructing token vocabularies. They operate on chunks of characters rather than on individual characters, and these chunks are constructed using algorithms such as byte pair encoding (BPE), which we will cover in detail in this video.
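The core idea of BPE is to repeatedly find the most frequent adjacent pair of tokens and replace it with a new token, growing the vocabulary one merge at a time. Here is a minimal sketch of that training loop, assuming we start from raw UTF-8 bytes (ids 0 to 255) and skip details that production tokenizers add, such as regex-based pre-splitting of the text and special tokens. The function names `get_stats`, `merge`, and `train_bpe` are hypothetical.

```python
from collections import Counter

def get_stats(ids):
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))

def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out = []
    i = 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules, starting from raw UTF-8 bytes."""
    ids = list(text.encode("utf-8"))  # base vocabulary: the 256 byte values
    merges = {}  # maps (id, id) -> newly minted id
    for k in range(num_merges):
        stats = get_stats(ids)
        if not stats:
            break  # fewer than two tokens left, nothing to merge
        pair = max(stats, key=stats.get)  # most frequent adjacent pair
        new_id = 256 + k                  # new ids start right after the bytes
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges

text = "aaabdaaabac"
ids, merges = train_bpe(text, 3)
print(ids)
print(merges)
```

On this toy string the first merge fuses the very common pair `"aa"` (bytes `(97, 97)`) into token 256, and each later merge can build on earlier ones, so the sequence gets shorter while the vocabulary grows.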

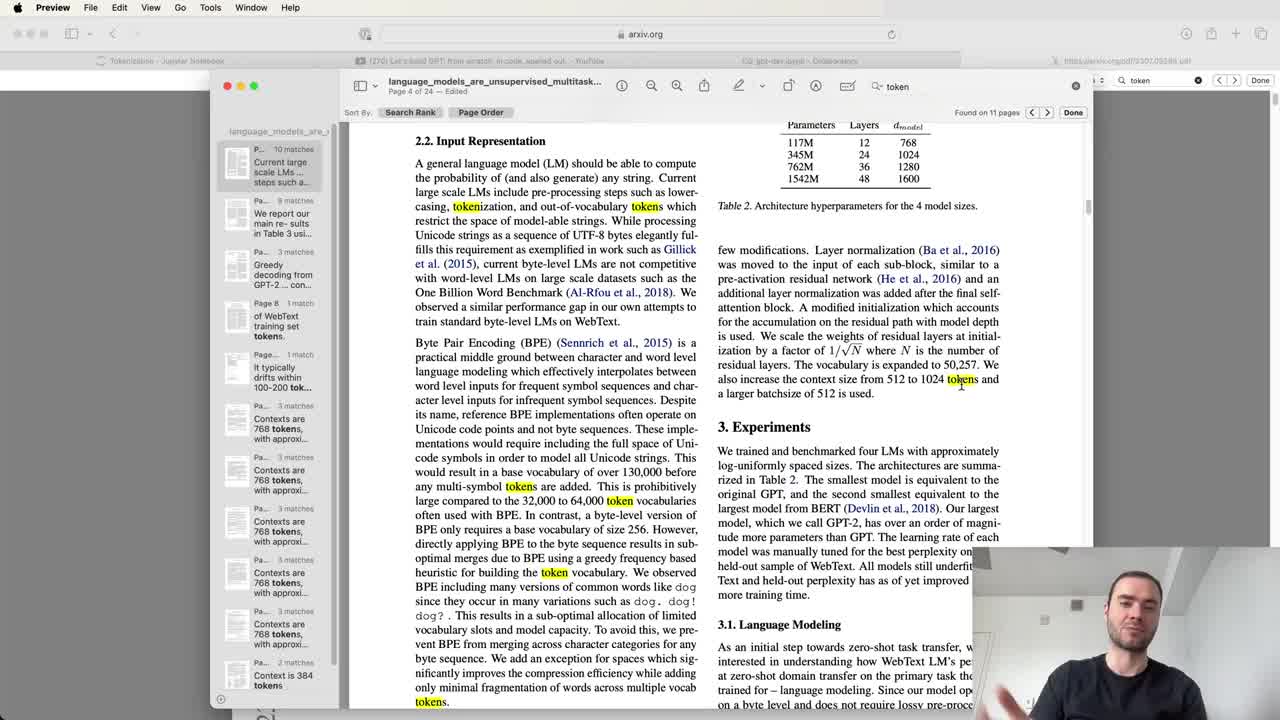

The GPT-2 paper introduced byte-level BPE as a mechanism for tokenization in the context of large language models.
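"Byte-level" means the algorithm starts from the UTF-8 bytes of the text rather than from Unicode characters. Every string, in any language, encodes to integers between 0 and 255, so a base vocabulary of 256 byte values can represent any input. The small illustration below uses only Python's built-in `str.encode`; the sample strings are arbitrary.

```python
# Any Unicode string maps to a sequence of byte values in 0..255.
samples = ["hello", "héllo", "안녕 👋"]
byte_ids = {s: list(s.encode("utf-8")) for s in samples}

for s, raw in byte_ids.items():
    # Every value fits in the 256-entry base vocabulary
    assert all(0 <= b < 256 for b in raw)
    print(f"{s!r}: {len(s)} characters -> {len(raw)} bytes -> {raw}")
```

ASCII characters take one byte each, while other characters take two to four, so raw byte sequences can be much longer than the text. BPE merges on top of the bytes are what compress them back down.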

Tokens are the fundamental unit of large language models. Everything is measured in tokens, and tokenization is the process of translating strings of text into sequences of tokens and back again.
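To make "text into tokens and back again" concrete, here is a self-contained sketch of a tiny byte-level BPE tokenizer with both directions. It is an assumption-laden toy, not GPT-2's actual tokenizer: there is no regex pre-splitting, the training text is made up, and the names `train`, `build_vocab`, `encode`, and `decode` are hypothetical. Encoding applies learned merges in the order they were learned; decoding looks up the bytes behind each token id.

```python
from collections import Counter

def get_stats(ids):
    """Count adjacent pairs of token ids."""
    return Counter(zip(ids, ids[1:]))

def merge(ids, pair, new_id):
    """Replace non-overlapping occurrences of `pair` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train(text, num_merges):
    """Learn merge rules (pair -> new id) from raw UTF-8 bytes."""
    ids = list(text.encode("utf-8"))
    merges = {}
    for k in range(num_merges):
        stats = get_stats(ids)
        if not stats:
            break
        pair = max(stats, key=stats.get)
        merges[pair] = 256 + k
        ids = merge(ids, pair, 256 + k)
    return merges

def build_vocab(merges):
    """Map every token id to the bytes it stands for."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), idx in merges.items():  # dict order = order the merges were learned
        vocab[idx] = vocab[a] + vocab[b]
    return vocab

def encode(text, merges):
    """Text -> token ids: start from bytes, apply the earliest-learned merge first."""
    ids = list(text.encode("utf-8"))
    while len(ids) >= 2:
        stats = get_stats(ids)
        pair = min(stats, key=lambda p: merges.get(p, float("inf")))
        if pair not in merges:
            break  # no learned merge applies anymore
        ids = merge(ids, pair, merges[pair])
    return ids

def decode(ids, vocab):
    """Token ids -> text. Arbitrary id sequences may not be valid UTF-8, hence errors='replace'."""
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

training_text = "the cat sat on the mat. the cat ate the rat."
merges = train(training_text, 10)
vocab = build_vocab(merges)

ids = encode("the cat sat", merges)
print(ids)
print(decode(ids, vocab))
```

Because unmerged bytes are always valid tokens, even text containing characters never seen during training (for example `"héllo wörld"`) round-trips exactly; it just compresses less.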

Tokenization is at the heart of much of the weirdness in LLMs. Many issues that look like problems with the neural network architecture or the model itself are actually issues with tokenization. For example: